What is reasoning? For many, it’s a word redolent of cryptic picture puzzles and fiendish maths tests designed to get you into grammar school.

Until recently, machines weren’t expected to reason at all—they simply followed instructions.

Now that’s beginning to change.

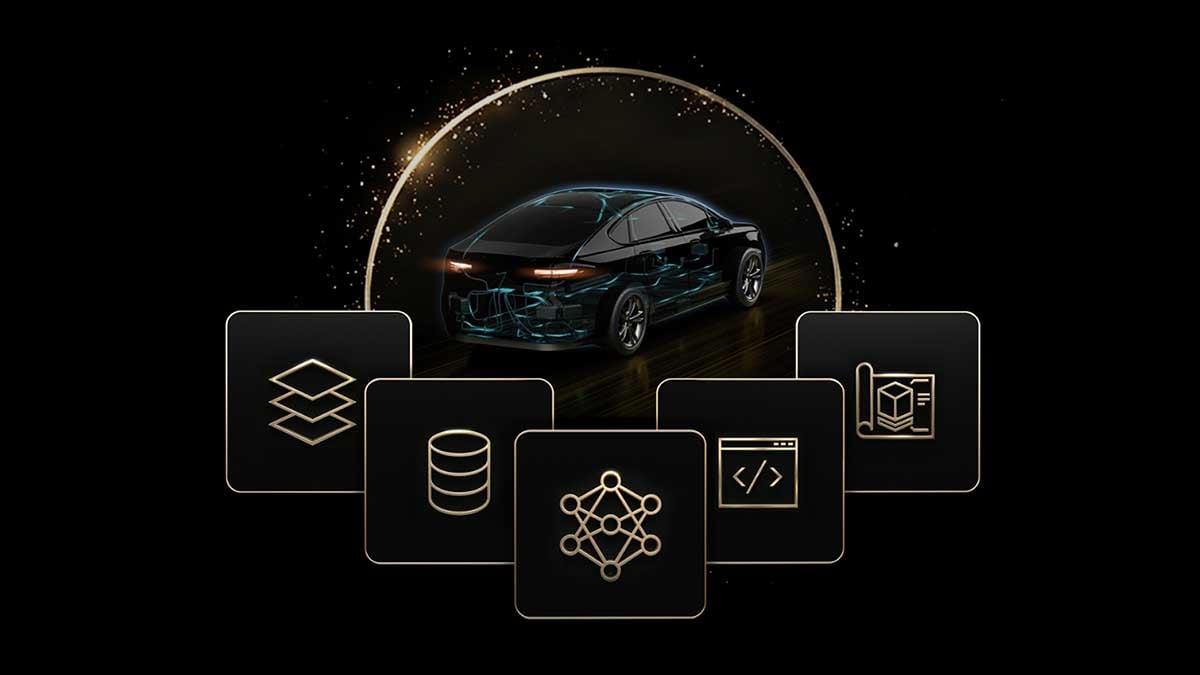

Earlier this month, chipmaker NVIDIA unveiled a new platform that, it says, is designed to give autonomous vehicles the ability to think through complex situations, infer likely outcomes, and even explain their decisions, much like a pupil justifying the steps of a tricky logic problem.

Announcing the new Alpamayo platform at the annual CES technology conference in Las Vegas, CEO Jensen Huang said the innovation was a breakthrough in autonomous driving.

Huang said that, instead of simply recognising lanes, traffic lights, or pedestrians, a reasoning-capable car can weigh probabilities, anticipate unusual behaviours, and respond to rare or unpredictable events in ways that traditional autonomous systems cannot.

Huang added that NVIDIA is working with Mercedes-Benz to produce a driverless car powered by Alpamayo. The vehicle is expected to be released in the US in the coming months, before being rolled out across Europe and Asia. A video demonstration shown during the keynote featured an AI-powered Mercedes-Benz driving through the streets of San Francisco, while a passenger sat behind the steering wheel with their hands resting in their lap.

“It drives so naturally because it learned directly from human demonstrators,” Huang told the audience. “But in every single scenario, it tells you what it’s going to do, and it reasons about what it’s about to do.”

For NVIDIA, which has built its business on creating the sort of high-powered chips needed to deploy and scale AI, the announcement is part of a shift towards enabling physical AI applications.

Rather than relying on large language models (LLMs) of the kind exemplified by ChatGPT, the new platform is built around a reasoning-based vision-language-action (VLA) model known as Alpamayo 1.

Unlike traditional systems, which often separate perception, decision-making, and action into distinct modules, reasoning models attempt to integrate them. A car can process what it sees, infer likely outcomes, and decide on the safest course of action—all while keeping a record of its logic. This end-to-end reasoning is intended to address the “long tail” of rare or complex scenarios that have long bedevilled autonomous driving.

According to NVIDIA, this means that rather than simply obeying traffic lights or staying in lane, vehicles will be able to interpret a dynamic environment and make judgements about situations they have never encountered before.

Frank McCleary, Partner at US management consulting firm Arthur D. Little, likens it to the way humans drive. “If you think about how you drive, you infer situations,” he explains. “You see someone looking around, potentially about to cross without waiting for the light to change, or a child playing on the pavement, and you become more cautious. That logic and reasoning is the real challenge with autonomy.”

Alpamayo’s ambitions place it in direct competition with existing autonomous systems, particularly those developed by Tesla and Waymo.

Elon Musk has long maintained that autonomy is primarily a problem of scale and data: collect enough driving footage, refine neural networks, and full self-driving will follow.

Yet McCleary cautions that this approach leaves gaps when faced with unusual scenarios. “The biggest challenge is those rare and complex scenarios,” he says. “That’s the gating factor that will determine the speed of adoption of higher levels of autonomy.”

Until now, most self-driving systems have relied heavily on pattern recognition. They perform well in common scenarios but can struggle when confronted with unusual or “long-tail” events, such as a delivery drone encountering an ambulance or a vehicle misreading a private road as a through street.

Reasoning models aim to close exactly these gaps. By integrating perception, inference, and decision-making, they are intended to handle situations that a traditional system might misinterpret or fail to recognise entirely. McCleary gives practical examples: “There are hundreds of thousands of edge cases that you and I driving don’t really notice, but we solve automatically. Someone starts merging, has their indicator on but isn’t actually moving. How do you respond? That’s where reasoning becomes essential.” A delivery drone that fails to recognise an approaching ambulance, or a robotaxi that tries to drive down a private driveway, are the kinds of uncommon events reasoning models are designed to manage.

The ability to explain decisions matters not only for regulators and passengers but also for insurers and manufacturers. As McCleary points out, “With autonomy, it’s really important for regulators, insurers, and OEMs to understand what logic was used to reach a decision. What was the perception, what information was taken in, and how did the model get there?” In a world where the vehicle, not the human, makes split-second decisions, reasoning and explainability are emerging as essential tools for trust, safety, and commercial viability.

Beyond safety, reasoning and explainability also intersect with insurance and liability. McCleary points out that when an AI system makes decisions, responsibility shifts away from the human driver. “You now have a computer making decisions,” he says. “So what does insurance look like when you’re just the passenger?” Reasoning models that log every decision in detail could allow insurers to determine fault more accurately, potentially enabling differentiated pricing based on vehicle hardware, software stacks, or real-world performance. In other words, the transparency that reasoning provides may not only make autonomous vehicles safer, but also reshape the economics of the industry.

Alpamayo is an open-source AI model, now available on the machine learning platform Hugging Face, where autonomous vehicle researchers can access it for free and retrain it.

Shreyasee Majumder, Social Media Analyst at GlobalData, says this is a shrewd tactical move.

“By open-sourcing the model, NVIDIA is effectively positioning itself as the Android of autonomy and creating an ecosystem where its high-compute hardware becomes the essential foundation for the automotive industry,” she says.

McCleary notes that the open-source nature of Alpamayo could have significant implications for the industry. By providing a flexible foundation, NVIDIA is lowering the barrier to entry for traditional carmakers and smaller startups alike, potentially accelerating adoption across the market.

“It gives OEMs a boost in terms of starting point,” he says. “You don’t have to begin with a blank sheet of paper. You can retrain an open model and save a significant amount of engineering effort.”

Bringing over two decades in journalism, Lucy Barnard has reported on everything from London’s property chessboard to Abu Dhabi’s vertical ambitions — and always delivered the story before deadline. Lucy is interested in how technology intersects with major global challenges, from climate change to gender equality and social justice. She believes innovation isn’t just about efficiency or convenience—it’s a powerful tool for building a fairer, more sustainable world.

Copyright 2026 – IOT Insider