We saw fancy radar that images almost as clearly as lidar, lidar almost as cheap as radar, and more.

Full vehicle autonomy has been about two years away for a decade or more, but at CES 2026, as at its umpteen predecessors, we got a preview of promising new technologies that claim they’ll hasten the arrival of this perennially tardy capability.

Radar (RAdio Detection And Ranging), as used in today’s automotive advanced driver assistance systems (ADAS), typically operates in the 24 to 81 gigahertz (GHz) range of the electromagnetic spectrum. Lidar (Light Detection And Ranging) operates in the near infrared, at 193 to 331 terahertz (THz). Teradar is radar that uses the 0.3 to 3.0 THz range.
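To put those bands side by side, free-space wavelength is simply λ = c/f. The short Python sketch below converts each quoted band edge; it shows the lidar band works out to roughly 1.55 to 0.91 micrometers and the 0.3 to 3.0 THz band to roughly 1,000 to 100 micrometers. The band labels are just illustrative names.

```python
# Convert the quoted frequency band edges into free-space wavelengths (lambda = c / f).
C = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_um(freq_hz: float) -> float:
    """Free-space wavelength in micrometers for a frequency given in hertz."""
    return C / freq_hz * 1e6


bands = {
    "automotive radar, 24 GHz": 24e9,
    "automotive radar, 81 GHz": 81e9,
    "terahertz radar, 0.3 THz": 0.3e12,
    "terahertz radar, 3.0 THz": 3.0e12,
    "lidar, 193 THz": 193e12,
    "lidar, 331 THz": 331e12,
}

for name, f in bands.items():
    print(f"{name:>26}: {wavelength_um(f):10.2f} um")
```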

The transmitting hardware, and the computing power required to process the returning radio signals, are typically cheaper and less complicated than what’s needed to run lidar. Increasing the frequency (decreasing the wavelength) by a factor of roughly 13, as Teradar does, improves angular resolution by that same factor for a given antenna size. This, in turn, sharpens surface perception, delivering more lidar-like point clouds that look a bit more like a camera might have recorded them. So instead of just detecting that something is there, Teradar can start to determine what the object is and how big it is.
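A rough way to see the 13x claim: a diffraction-limited antenna’s angular resolution is approximately λ/D, where D is the aperture size, so for the same aperture the resolution scales directly with frequency. The minimal sketch below assumes a typical 77 GHz automotive radar, a hypothetical 1 THz operating point (the article states only the roughly 13x factor), and a hypothetical 5 cm aperture; the ratio does not depend on the aperture chosen. Real antenna arrays add design-specific factors, so treat the absolute degrees as ballpark figures.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def beamwidth_deg(freq_hz: float, aperture_m: float) -> float:
    """Approximate diffraction-limited angular resolution, theta ~ lambda / D, in degrees."""
    wavelength_m = C / freq_hz
    return math.degrees(wavelength_m / aperture_m)


APERTURE_M = 0.05  # hypothetical 5 cm aperture, identical for both sensors

mmwave = beamwidth_deg(77e9, APERTURE_M)  # typical 77 GHz automotive radar
thz = beamwidth_deg(1.0e12, APERTURE_M)   # assumed ~1 THz operating point

print(f"77 GHz: {mmwave:.2f} deg, 1 THz: {thz:.3f} deg, ratio {mmwave / thz:.1f}x")
```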

Another big Teradar advantage over lidar: fog droplets (1-10 micrometers) and raindrops (100-1,000 micrometers) readily scatter a 1-micrometer laser pulse, but Teradar’s 100-300-micrometer signals are vastly less affected. Hence Teradar claims to occupy a sweet spot: short enough to resolve geometry well, long enough to ignore aerosol particles.
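One standard way to reason about this is the scattering size parameter x = πd/λ for a droplet of diameter d. When x is much smaller than 1, scattering is weak (the Rayleigh regime, where scattering efficiency falls off roughly as x to the fourth power), while x near or above 1 means strong Mie or geometric scattering. The sketch below checks the fog case only, using the droplet sizes and wavelengths quoted above; it is a back-of-the-envelope illustration, not Teradar’s own analysis.

```python
import math


def size_parameter(diameter_um: float, wavelength_um: float) -> float:
    """Scattering size parameter x = pi * d / lambda for a spherical droplet."""
    return math.pi * diameter_um / wavelength_um


# Fog droplet diameters from the text; wavelengths: ~1 um lidar vs. 100-300 um terahertz.
for d in (1.0, 10.0):
    for lam in (1.0, 100.0, 300.0):
        x = size_parameter(d, lam)
        # x << 1: weak, Rayleigh-like scattering (efficiency scales roughly as x**4).
        # x of order 1 or larger: strong Mie or geometric-optics scattering.
        print(f"d={d:5.1f} um, lambda={lam:6.1f} um -> x={x:7.3f}")
```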

So, to recap: Teradar delivers better shape definition than traditional millimeter-wave radar and much better weather robustness than lidar, can be mounted behind plastic bumper fascias, doesn’t require optical cleaning, isn’t sensitive to low-light conditions, and provides true velocity measurement. It does cost more than millimeter-wave radar. Today it’s still in the low three-digit price range, but in time it should be quite competitive with the most affordable solid-state flash lidar products, such as MicroVision’s (see below).

Requirements are coming in 2029 for vehicles to be able to detect pedestrians and vulnerable road users front and rear for automatic emergency braking. Corner Teradar units provide this while also delivering sufficient resolution to operate parking systems, eliminating those ugly ultrasonic sensors that have been blighting bumper fascias forever.

Terahertz radar was documented in the 1960s, so why are we only now getting it? Moore’s Law. Teradar’s signals must be processed on a sophisticated chip of a type the industry is only now growing into. Expect Teradar to start bringing down the price of autonomy by 2028 with one or more of the five automakers currently working with it.

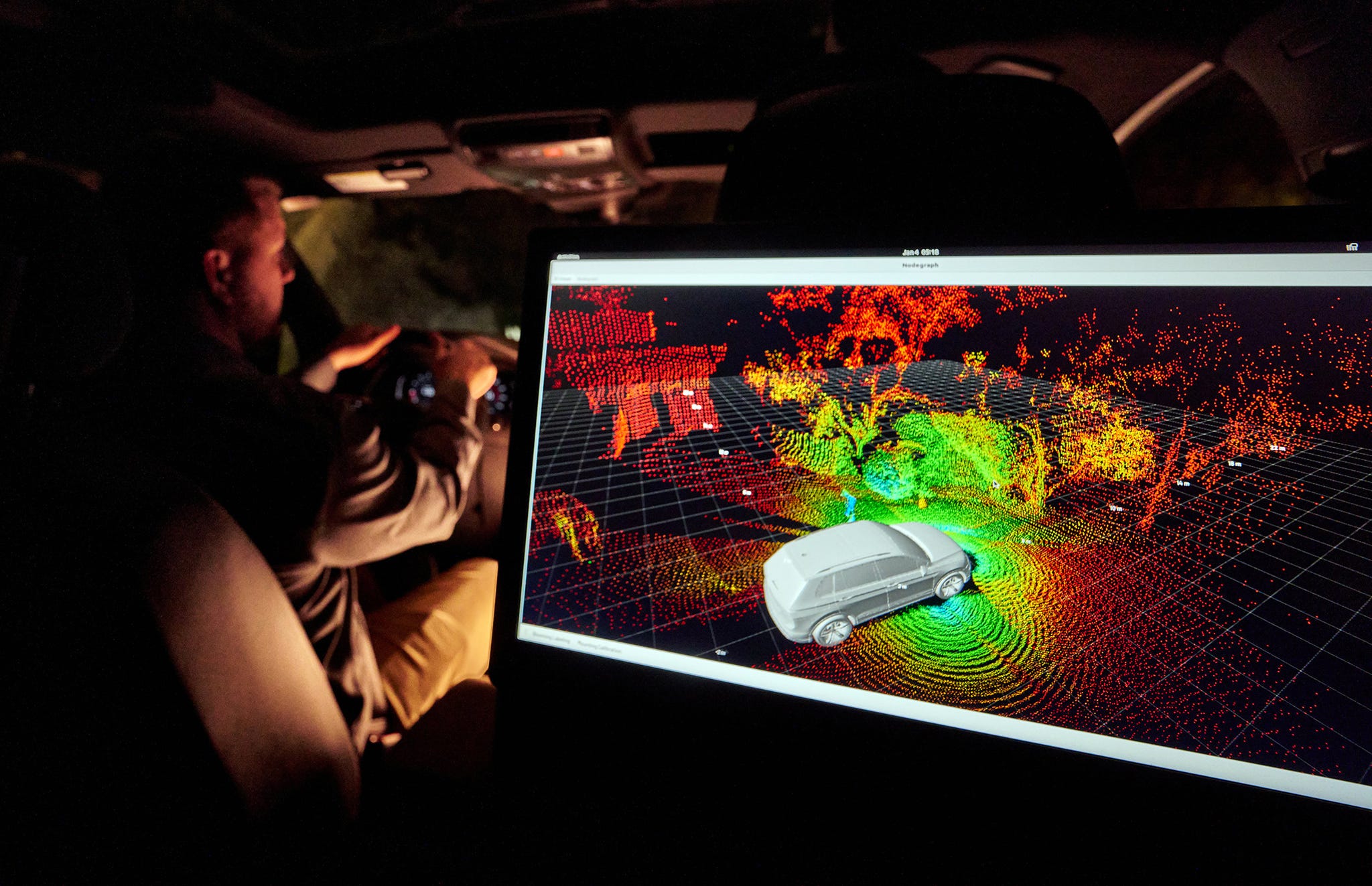

MicroVision’s point cloud

Not so fast, Teradar! MicroVision hopes to democratize lidar to the point that it can improve the fidelity of regular ADAS features like adaptive cruise control and automatic emergency braking. We covered MicroVision’s Movia S solid-state flash lidar when it was introduced at the IAA show in 2025, but that system’s Tri-Lidar approach still used a more conventional Micro-Electro-Mechanical System (MEMS) with chip-steered mirrors aiming the laser pulses.

But MicroVision just acquired Scantinel Photonics GmbH, a German developer of 1550-nm frequency-modulated continuous-wave (FMCW) lidar technology. As we’ve previously covered, the reflection of this fancier laser’s continuous wave experiences a Doppler shift (as with sound or radio waves), allowing an FMCW system to measure the velocity as well as the location of whatever the light bounced off. Scantinel’s big advance is figuring out how to print all the optical functions (lasers, waveguides, modulators, detectors) on a CMOS-compatible silicon chip.
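The classic way a triangular FMCW chirp separates range from Doppler can be sketched in a few lines. On the rising ramp the Doppler shift subtracts from the range-induced beat frequency; on the falling ramp it adds, so averaging the two beats gives range and half their difference gives radial velocity. The chirp bandwidth and ramp time below are hypothetical values chosen for illustration (only the 1550 nm wavelength comes from the text), the sign convention treats an approaching target as positive velocity, and the sketch assumes the range term is large enough that both beat frequencies stay positive. It is a textbook illustration, not Scantinel’s or MicroVision’s design.

```python
C = 299_792_458.0          # speed of light, m/s
WAVELENGTH_M = 1550e-9     # 1550 nm laser, as in the text
BANDWIDTH_HZ = 1.0e9       # hypothetical chirp bandwidth
CHIRP_TIME_S = 10e-6       # hypothetical duration of each up or down ramp
SLOPE = BANDWIDTH_HZ / CHIRP_TIME_S  # chirp slope, Hz per second


def beat_frequencies(range_m: float, radial_velocity_mps: float) -> tuple[float, float]:
    """Simulate up- and down-ramp beat frequencies for one target (approaching = positive)."""
    f_range = 2.0 * range_m * SLOPE / C
    f_doppler = 2.0 * radial_velocity_mps / WAVELENGTH_M
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up: float, f_down: float) -> tuple[float, float]:
    """Recover range and radial velocity from a triangular-chirp beat-frequency pair."""
    f_range = (f_up + f_down) / 2.0
    f_doppler = (f_down - f_up) / 2.0
    return C * f_range / (2.0 * SLOPE), f_doppler * WAVELENGTH_M / 2.0


f_up, f_down = beat_frequencies(100.0, 10.0)  # target at 100 m, closing at 10 m/s
r, v = range_and_velocity(f_up, f_down)
print(f"beat: {f_up / 1e6:.1f} / {f_down / 1e6:.1f} MHz -> range {r:.1f} m, velocity {v:.1f} m/s")
```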

There’s a lot of work to be done, but MicroVision expects to integrate this solid-state FMCW long-range lidar into its four- or five-lidar perception stack, priced to support Level 2 or 2+ ADAS systems in mainstream vehicles by the mid-2030s. In the meantime, MicroVision is working to build scale (and hence lower pricing) on its solid-state flash lidar by integrating it into industrial applications like automated forklifts and in security/defense applications like surveillance drones. We got a demo ride in a MicroVision test car and were impressed with its detailed point cloud imaging, which can discern paint lines, curbs, cars, and pedestrians with good fidelity.

There’s sensing, and then there’s making sense of what’s been sensed. The brainiacs who founded Neural Propulsion Systems (now renamed Atomathic) reckon that the lousy images returned by most of today’s mainstream radar sensors are simply “a math problem,” and one they’re eager to solve with AI (hence the name AI Detection And Ranging). Here’s the deal: A typical automotive radar may send 16 radio signals out into the world. They bounce off everything, returning loads of responses, many of which appear to be gibberish. So the systems report the position and velocity of the returns they’re most confident in (maybe the top 16) as points. ADAS systems then fuse this info with camera data to figure out what, exactly, each dot probably represents.
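The conventional “keep the most confident returns” step described above amounts to a top-k selection. In this minimal sketch, the Detection fields and the confidence score are hypothetical stand-ins (production radars use their own detection thresholding and data formats); k defaults to the article’s ballpark of 16.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Detection:
    range_m: float
    azimuth_deg: float
    radial_velocity_mps: float
    confidence: float  # hypothetical score, e.g. a signal-to-noise estimate


def report_points(candidates: list[Detection], k: int = 16) -> list[Detection]:
    """Keep only the k most confident returns, strongest first; everything else is dropped."""
    return heapq.nlargest(k, candidates, key=lambda d: d.confidence)


candidates = [
    Detection(42.0, -3.0, -1.2, 0.95),  # strong return, e.g. a large metal surface
    Detection(41.5, -2.0, -1.1, 0.30),  # weaker return right beside it
    Detection(80.0, 10.0, 0.0, 0.10),   # likely clutter or noise
]
print(report_points(candidates, k=2))
```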

Atomathic likens this conventional approach to answering a question with the first thought that pops into your head, versus the answer you might give if you stopped to think about it more deeply. When a frame of raw data comes in, as happens 10 to 15 times per second, the Atomathic algorithms generate six to eight hypotheses as to what might be out there. Then AI sorts through the hypotheses to select the most likely one(s). Note that this AI is not trained on data, as a large language model is. Rather, it is informed strictly by the immutable laws of physics.
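The “generate hypotheses, then pick the one that best explains the raw data” idea can be illustrated with a toy analysis-by-synthesis loop: a physics-style forward model predicts the raw signal each hypothesis would produce, and the hypothesis with the smallest squared residual wins, with no training data involved. This is a generic sketch, not Atomathic’s algorithm; the cosine-per-reflector forward model, the 64-sample frame, the integer frequency bins, and all function names are hypothetical simplifications. The example frame contains a weak reflector beside a strong one with 100 times its amplitude (10,000 times its power), which keeping only the single strongest return would miss.

```python
import math

N = 64  # samples in one hypothetical frame of raw data

Hypothesis = list[tuple[int, float]]  # (frequency bin, amplitude) per reflector


def synthesize(reflectors: Hypothesis) -> list[float]:
    """Toy forward model: each reflector adds a cosine at its frequency bin with its amplitude."""
    return [
        sum(amp * math.cos(2 * math.pi * bin_ * n / N) for bin_, amp in reflectors)
        for n in range(N)
    ]


def residual(measured: list[float], hypothesis: Hypothesis) -> float:
    """Sum of squared differences between the raw data and what the hypothesis predicts."""
    predicted = synthesize(hypothesis)
    return sum((m - p) ** 2 for m, p in zip(measured, predicted))


def best_hypothesis(measured: list[float], hypotheses: list[Hypothesis]) -> Hypothesis:
    """Pick the hypothesis whose predicted signal best explains the measurement."""
    return min(hypotheses, key=lambda h: residual(measured, h))


# Noise-free frame: a strong reflector (amplitude 100) and a weak one (amplitude 1).
measured = synthesize([(5, 100.0), (8, 1.0)])

hypotheses = [
    [(5, 100.0)],             # strong reflector only
    [(5, 100.0), (8, 1.0)],   # strong plus weak reflector
    [(6, 100.0)],             # strong reflector in the wrong bin
    [(5, 100.0), (9, 1.0)],   # weak reflector in the wrong bin
]
print(best_hypothesis(measured, hypotheses))
```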

The result? Now both the UPS driver and the flat-backed metal truck he or she is walking beside (an object 10,000 times more reflective than a softly clothed human) are recognized. And each is much more recognizable for what it is, making both simpler to fuse with the camera’s imagery.

The algorithms don’t much care which radar wavelength is feeding them data, but they do insist on raw, unfiltered, unprocessed data. Generating the hypotheses takes considerable computing power, but today’s latest zonal and tomorrow’s centralized electronic architectures are likely to have more than enough of it.

At that point, Atomathic’s algorithms could potentially be delivered as an over-the-air update that greatly improves the fidelity of the vehicle’s radar sensing, and hence the safety of its driver-assist or autonomy systems. But only after said algorithms have been extensively tested, which is likely to take at least a few more years. The only added costs will likely be IP licensing and compute power, and myriad other programs will already have demanded that compute.

I started critiquing cars at age 5 by bumming rides home from church in other parishioners’ new cars. At 16 I started running parts for an Oldsmobile dealership and got hooked on the car biz. Engineering seemed the best way to make a living in it, so with two mechanical engineering degrees I joined Chrysler to work on the Neon, LH cars, and 2nd-gen minivans. Then a friend mentioned an opening for a technical editor at another car magazine, and I did the car-biz equivalent of running off to join the circus. I loved that job too until the phone rang again with what turned out to be an even better opportunity with Motor Trend. It’s nearly impossible to imagine an even better job, but I still answer the phone…

A Part of Hearst Digital Media

©2026 Hearst Autos, Inc. All Rights Reserved.
